Unsupervised Multi-class Object Co-segmentation with Contrastively Learned CNNs

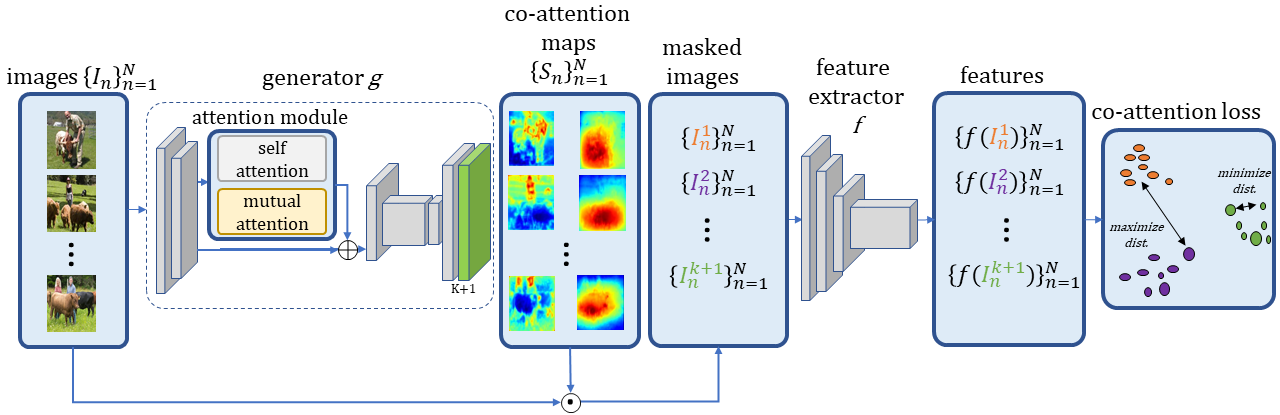

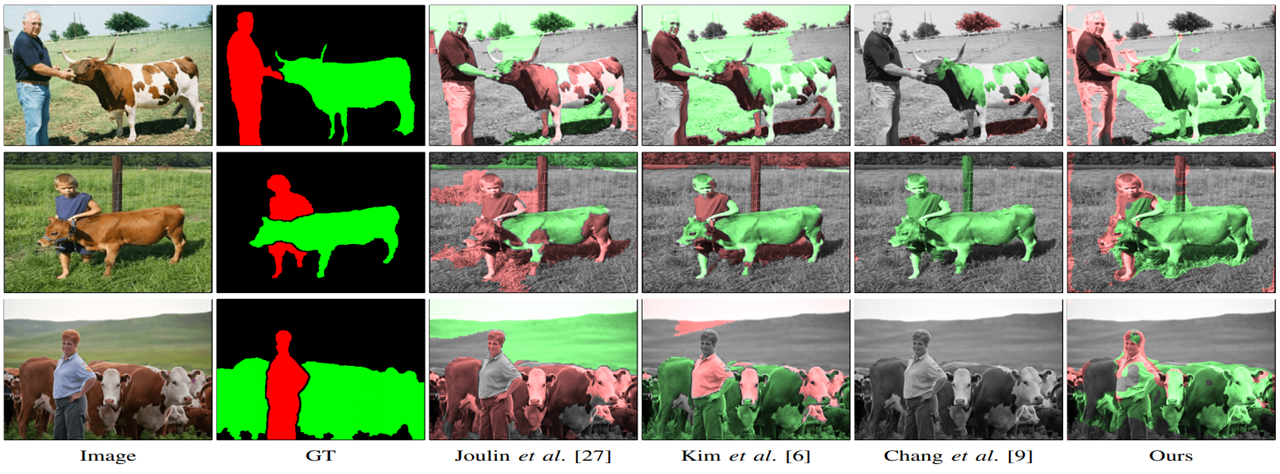

Unsupervised multi-class object co-segmentation aims to segment the objects of multiple common categories that appear across a set of images, without any supervision. The task is challenging because no annotated training data are available for the object categories, and because the object categories and the background exhibit high intra-class variation and inter-class similarity. We address this problem with the first unsupervised, end-to-end trainable CNN-based framework, which consists of three collaborative modules: the attention module, the co-attention map generator, and the feature extractor. The attention module employs two types of attention: self-attention, which captures long-range dependencies within an object, and mutual attention, which explores co-occurrence patterns across images. Guided by the attention module, the co-attention map generator learns to localize objects of the same category. The feature extractor then estimates discriminative cues that separate the common-class objects from the background. Finally, we optimize the network in an unsupervised fashion with the proposed co-attention loss, which reduces the intra-class discrepancy of the extracted object features while enlarging the inter-class margins. Experimental results show that the proposed approach performs favorably against existing algorithms.
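The idea behind the loss (pull features of the same category together, push features of different categories apart by a margin) can be illustrated with a generic margin-based contrastive loss. The sketch below is not the paper's co-attention loss: the function names, the squared-hinge form, the `margin` parameter, and the assumption that category pseudo-labels are already given are all illustrative choices. In the unsupervised setting such labels would have to come from the network's own predictions.

```python
import math
from itertools import combinations


def euclidean(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(feats, labels, margin=1.0):
    """Generic margin-based contrastive loss (hypothetical, for illustration).

    feats  : list of feature vectors, one per extracted region
    labels : list of pseudo-labels (assumed given), e.g. "cat", "dog", "bg"
    margin : minimum distance wanted between different-category features
    """
    pull, push = 0.0, 0.0
    n_pos, n_neg = 0, 0
    for (fi, li), (fj, lj) in combinations(zip(feats, labels), 2):
        d = euclidean(fi, fj)
        if li == lj:
            # Same category: penalize any distance (intra-class discrepancy).
            pull += d ** 2
            n_pos += 1
        else:
            # Different categories: penalize only pairs closer than the margin.
            push += max(0.0, margin - d) ** 2
            n_neg += 1
    # Average each term separately; guard against empty pair sets.
    return pull / max(n_pos, 1) + push / max(n_neg, 1)


if __name__ == "__main__":
    feats = [[0.0, 0.0], [0.1, 0.0], [2.0, 0.0]]
    labels = ["cat", "cat", "bg"]
    print(contrastive_loss(feats, labels))
```

The loss is zero only when same-category features coincide and every different-category pair is at least `margin` apart, which mirrors the two goals stated in the abstract.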


@inproceedings{UMCSEGwithCNNs,
  author = {Lee, Kuan-Yi* and Su, Hsin-En and Lin, Ching-Yuan and Tsai, Chung-Chi and Lin, Chia-Wen and Lin, Yen-Yu},
  title = {Unsupervised Multi-class Object Co-segmentation with Contrastively Learned CNNs},
  booktitle = {},
  year = {2021}
}

- Kim et al. On Multiple Foreground Cosegmentation. In CVPR, 2012.

- Joulin et al. Multi-Class Cosegmentation. In CVPR, 2012.

- Chang et al. Optimizing the Decomposition for Multiple Foreground Cosegmentation. In CVIU, 2015.